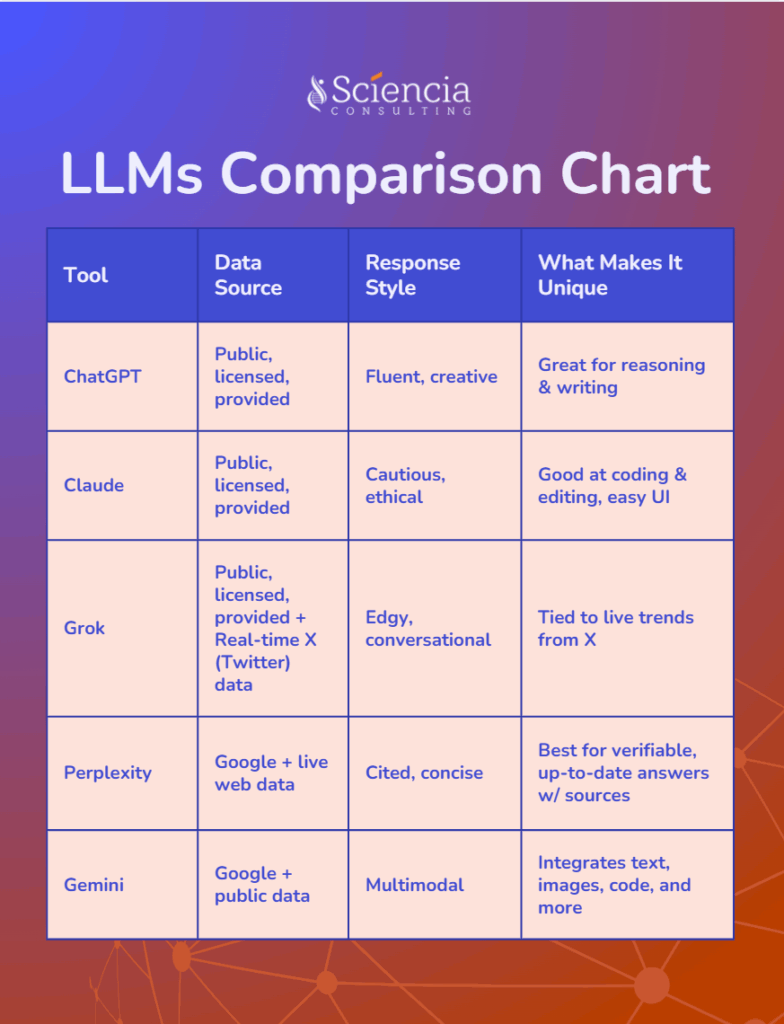

With so many AI co-pilots available today, it’s easy to feel overwhelmed by the choices. Whether you are writing, coding, researching, or experimenting with creative pursuits, understanding what sets one AI assistant apart from another matters. In life science, if you need to create proposals, visualize DNA structures, or draw diagrams that explain CRISPR technology, an AI co-pilot can work alongside you to speed up content creation. This project breaks down the most popular large language models (LLMs) and provides a simple side-by-side comparison to help users decide which tool to use for life science content development. Read on to learn how we tested, what we found, and how to choose the AI co-pilot that best fits your needs and preferences.

Overview

We compared five major LLM-driven AI co-pilots: ChatGPT (OpenAI), Claude (Anthropic), Gemini (Google DeepMind), Perplexity, and Grok (xAI). We looked at how each tool was developed and examined each model’s strengths and weaknesses in science communication, writing, and project implementation tasks. Each tool’s response to each prompt was rated out of 5 for how effectively it handled activities such as researching, creating diagrams, outlining, and handling timelines.

Methodology: How We Tested

All AI tools were given the same task-directed prompts, such as summarizing a science article, generating scientific images, and constructing a project plan. Responses were graded on a 1–5 scale for accuracy, clarity, and usefulness. We also consulted technical reports and product documentation to learn about each model’s design and data sources.

| Rating | 1 – Very Poor | 2 – Poor | 3 – Okay | 4 – Good | 5 – Excellent |
| --- | --- | --- | --- | --- | --- |
| Expectations | Fails to meet expectations | Barely meets expectations | Somewhat meets expectations | Meets expectations with minor issues | Fully meets or exceeds expectations |
| Description | – Largely irrelevant, incoherent, or factually incorrect – Severe misunderstanding of the prompt – Missing critical content or completely off-topic – Did not listen to user’s intent | – Incomplete or off-target response – Frequent errors or misunderstandings – Little effort to engage with user intent – Lacks organization or clarity | – Responds to the prompt but misses key details – Some errors, vague, or lack of clarity – May misinterpret part of the task – Response structure is functional but basic | – Mostly accurate and relevant response – Minor errors or mistakes – Clear communication that responds to user’s intent – Lacks depth, nuance, or could be refined more | – Accurate, complete, and directly answers the prompt – Clear, well-structured, precise language – Insightful or creative value beyond basic expectations or human-like response – No factual errors or misunderstandings |
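
For anyone who wants to log ratings in a script rather than a document, the rubric can be captured as a small lookup. This is only a sketch: the labels come from the rubric, but the names `RUBRIC` and `describe_rating` are hypothetical, and treating a missing score as N/A is an assumption based on how the results mark tasks a tool could not attempt.

```python
from typing import Optional

# Labels copied from the rubric; the dictionary name is a hypothetical choice.
RUBRIC = {
    1: "Very Poor",
    2: "Poor",
    3: "Okay",
    4: "Good",
    5: "Excellent",
}


def describe_rating(score: Optional[int]) -> str:
    """Return a readable label for a rubric score.

    None stands for N/A (assumption: the tool could not attempt the task).
    """
    if score is None:
        return "N/A"
    if score not in RUBRIC:
        raise ValueError(f"score must be an integer from 1 to 5, got {score!r}")
    return f"{score} ({RUBRIC[score]})"


print(describe_rating(4))
print(describe_rating(None))
```

Rejecting anything outside 1 to 5 keeps typos out of the ratings before they are averaged.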

These are the prompts we tested on each AI tool, and why we chose them.

Real-time Information Retrieval

Prompt: What are the most recent updates on the Phase 3 trial for rocatinlimab?

Why: This prompt evaluates each AI’s ability to access up-to-date information from the web and how accurately it handles the scientific and medical context. Summarizing the most recent updates on a clinical trial for a specific medication shows whether the AI can distinguish outdated data from fresh data and whether it cites reputable sources.

Literature Summarizing

Prompt: Summarize the key findings of this paper: https://pmc.ncbi.nlm.nih.gov/articles/PMC11901500/

Why: A key feature of most AI tools is helping users digest dense or difficult papers into a simple summary of the key points. This prompt tests whether the AI can access hyperlinks and whether it can extract the core findings and conclusions from the paper into a concise, understandable summary.

Collaboration and Communication

Prompt: Write a professional email to a collaborator asking for access to raw sequencing data from our shared study.

Why: Scientific collaboration requires clear, respectful, and concise communication. This prompt tests the AI’s ability to write professionally in a way that suits the scientific community. Done well, AI-drafted emails can reduce miscommunication and improve clarity.

Title Creation

Prompt: Suggest 5 catchy titles for a blog post explaining RNA vaccines to a non-scientific audience.

Why: One of the key issues with AI is its limited creativity, which often calls for a human touch. We used this prompt to assess whether AI can generate unique and catchy titles that also appeal to a specific audience, in this case readers outside the scientific community.

Content Outlining

Prompt: Create a content outline for a blog post explaining how CRISPR works in plain language for a science-literate audience.

Why: As with the previous prompt, we used this prompt to evaluate whether AI can appeal to a specific audience, here a science-literate one. We also tested whether each tool can organize information logically, include all of the key information, and cover technical concepts effectively.

Scientific Text Revision

Prompt: Rewrite this paragraph to be more concise and clear while keeping scientific accuracy.

Why: This prompt measures the AI’s ability to edit a specific text while maintaining clarity and scientific accuracy. AI writing often becomes generic and uniform, which strips the human touch from the text, so it matters that an AI can improve academic writing without losing the author’s voice.

Image Generation

Prompt: Generate a diagram showing the steps of the CRISPR-Cas9 gene-editing process in eukaryotic cells.

Why: Diagrams are crucial for teaching and learning in science, since accurate, useful, and understandable images help support the text. More than half of the AI tools we tested either cannot generate diagrams or cannot do so accurately.

Presentation Preparation

Prompt: Turn this research abstract into a 5-slide presentation outline suitable for a grad-level journal club.

Why: Turning an abstract into an outline would make preparing presentations faster and more efficient for scientists. This prompt also tests whether the AI can identify which information is most important to include for a specific audience.

Project Management

Prompt: Plan a 3-month content project to publish a white paper and 3 blog posts on AI in immuno-oncology clinical trials, targeting pharma execs. Break it into weekly tasks across research, writing, review, and publishing, including team roles, timelines, and dependencies in a table format.

Why: This prompt tests the AI’s ability to plan over a long time frame, pushing it to think strategically and logistically by breaking a big project into smaller, manageable steps while keeping the structure easy to read.

Results: What We Discovered

We recorded each of the AI tools’ responses in a document:

https://docs.google.com/document/d/1-9zWJ24EA_06UInSoO5ThChzGYDDkaJC10L4m-F7JcU/edit?usp=sharing

We also kept track of our testing notes and the reasoning behind each rating:

https://docs.google.com/document/d/1PlnCRhaiMLaEwRQ1nl6kDax3TARXTQJAm7Q0SEKe7dM/edit?usp=sharing

| Category | ChatGPT | Claude | Gemini | Perplexity | Grok |
| --- | --- | --- | --- | --- | --- |
| Real-time Info Retrieval | 4 | 2 | 2 | 5 | 4 |
| Literature Summarizing | 5 | N/A | 4 | 3 | 3 |
| Collaboration & Communication | 4 | 3 | 3 | 4 | 2 |
| Title Creation | 3 | 4 | 3 | 2 | 3 |
| Content Outlining | 4 | 5 | 4 | 4 | 4 |
| Scientific Text Revision | 4 | 4 | 5 | 4 | 4 |
| Image Generation | 3 | 2 | N/A | N/A | N/A |
| Presentation Preparation | 3 | 3 | 3 | 5 | 3 |
| Project Management | 5 | 3 | 4 | 5 | 4 |
| Overall | 3.89 | 3.25 | 3.5 | 4 | 3.375 |
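
The Overall row is consistent with averaging each tool’s ratings and leaving N/A entries out of the calculation, rather than counting them as zero. The sketch below reproduces that arithmetic from the table; the names `TOOLS`, `SCORES`, and `overall_scores` are hypothetical, and representing N/A as `None` is an assumption made for illustration.

```python
from statistics import mean

TOOLS = ["ChatGPT", "Claude", "Gemini", "Perplexity", "Grok"]

# Scores copied from the results table, in TOOLS order; None stands for N/A.
SCORES = {
    "Real-time Info Retrieval":      [4, 2, 2, 5, 4],
    "Literature Summarizing":        [5, None, 4, 3, 3],
    "Collaboration & Communication": [4, 3, 3, 4, 2],
    "Title Creation":                [3, 4, 3, 2, 3],
    "Content Outlining":             [4, 5, 4, 4, 4],
    "Scientific Text Revision":      [4, 4, 5, 4, 4],
    "Image Generation":              [3, 2, None, None, None],
    "Presentation Preparation":      [3, 3, 3, 5, 3],
    "Project Management":            [5, 3, 4, 5, 4],
}


def overall_scores(scores, tools):
    """Average each tool's ratings, skipping categories marked N/A (None)."""
    result = {}
    for i, tool in enumerate(tools):
        rated = [row[i] for row in scores.values() if row[i] is not None]
        result[tool] = mean(rated)
    return result


overall = overall_scores(SCORES, TOOLS)

# Rank tools from highest to lowest average.
ranking = sorted(overall, key=overall.get, reverse=True)
for tool in ranking:
    print(f"{tool}: {overall[tool]:.3f}")
# Perplexity: 4.000
# ChatGPT: 3.889
# Gemini: 3.500
# Grok: 3.375
# Claude: 3.250
```

Skipping N/A means a tool is not penalized for a task it could not attempt. Counting N/A as zero would instead lower the averages of every tool with a missing score, so the choice is worth stating whenever the rubric is reused.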

Here’s a summary of each AI tool’s strengths and weaknesses based on the testing results:

Real-Time Information Retrieval

- ChatGPT (4/5): Strong at providing relevant and understandable summaries but struggles with citing the most up-to-date sources.

- Claude (2/5): Weak at fetching current information and lacks specific recent data, limiting its utility for time-sensitive questions.

- Gemini (2/5): Limited in real-time responsiveness and failed to deliver useful recent insights.

- Perplexity (5/5): Excellent at real-time information retrieval with precise and up-to-date results by providing a list of relevant, credible sources.

- Grok (4/5): Generally accurate in real-time lookup, though occasionally misses nuance or depth.

Literature Summarizing

- ChatGPT (5/5): Provides accurate and comprehensive summaries with no factual errors.

- Claude (N/A): Unable to access the link provided.

- Gemini (4/5): Offers solid summaries but is slightly lacking in detail and precise rewording.

- Perplexity (3/5): Can be helpful but often misses deeper understanding or synthesis of the paper.

- Grok (3/5): Functional summarizer but oversimplifies and may skip important points.

Collaboration & Communication

- ChatGPT (4/5): Creates professional, useful templates but lacks personal tailoring.

- Claude (3/5): Offers adequate communication output but needs improvement in tone and clarity.

- Gemini (3/5): Mediocre in crafting messages that feel natural or context-specific.

- Perplexity (4/5): Strong at structuring messages with clarity, though still generic.

- Grok (2/5): Often too brief or awkwardly phrased for collaborative writing.

Title Creation

- ChatGPT (3/5): Titles are functional but often generic or unoriginal.

- Claude (4/5): Generates more creative and targeted titles than others.

- Gemini (3/5): Title suggestions are serviceable but lack uniqueness.

- Perplexity (2/5): Generates diverse options, but they lack creativity.

- Grok (3/5): Titles tend to be plain and not especially engaging.

Content Outlining

- ChatGPT (4/5): Provides comprehensive and structured outlines with clear flow.

- Claude (5/5): Excels with thoughtful, detailed breakdowns ideal for content planning.

- Gemini (4/5): Gives organized outlines but misses finer nuances or citations.

- Perplexity (4/5): Strong at outlining logical sequences with usable structure.

- Grok (4/5): Well-structured and informative outlines but lacks citation support.

Scientific Text Revision

- ChatGPT (4/5): Rewrites are clear and more concise but can lose scientific tone.

- Claude (4/5): Effective balance between clarity and technical language.

- Gemini (5/5): Excellent at maintaining scientific integrity while improving readability.

- Perplexity (4/5): Generally accurate but occasionally overly simplified.

- Grok (4/5): Provides clean revisions, though with some technical imprecision.

Image Generation

- ChatGPT (3/5): Capable of producing decent visuals but prone to inaccuracies.

- Claude (2/5): Struggles with visual accuracy and coherence.

- Gemini (N/A): Unable to generate images.

- Perplexity (N/A): Unable to generate images.

- Grok (N/A): Unable to generate images.

Presentation Preparation

- ChatGPT (3/5): Creates usable outlines but often too vague for advanced needs.

- Claude (3/5): Presentations are clear but lack visual guidance or creative depth.

- Gemini (3/5): Provides structured slides, but misses engagement-enhancing details.

- Perplexity (5/5): Excels at converting content into actionable, clear slides with structure.

- Grok (3/5): Slides are passable but tend to lack polish or supporting visuals.

Project Management

- ChatGPT (5/5): Offers highly detailed timelines with roles and dependencies clearly laid out.

- Claude (3/5): Covers the basics well but lacks structured formatting and detail.

- Gemini (4/5): Provides good planning support but not as in-depth or tabular.

- Perplexity (5/5): Delivers excellent organization and actionable tasks.

- Grok (4/5): Supports planning well at a broad level but gives less detail in task breakdowns.

General Overview

ChatGPT (OpenAI): 3.89/5

Strengths:

- Performed well at finding and simplifying scientific findings for readers.

- Created excellent project timelines.

Weaknesses:

- Struggles with creating creative content.

- Limited in producing high-quality, accurate scientific diagrams.

Claude (Anthropic): 3.25/5

Strengths:

- Great at structuring complex scientific concepts while maintaining clarity.

- Retains key information while making text more condensed.

Weaknesses:

- Poor access to or interpretation of up-to-date clinical trial data.

- Struggles to break down longer-term projects into actionable timelines.

- Weak or inaccurate in creating visual scientific content.

Gemini (Google DeepMind): 3.5/5

Strengths:

- Strong at enhancing clarity without losing scientific rigor.

- Reliable for organizing and simplifying complex topics.

Weaknesses:

- Struggles with accessing current web data through links.

- Lacks polish in making content for audience-specific formats.

- Cannot generate diagrams or images.

Perplexity: 4/5

Strengths:

- Best performer at accessing and summarizing up-to-date scientific information.

- Excels at identifying relevant data and translating it into audience-specific content formats.

Weaknesses:

- May misinterpret or miss key findings in scientific papers.

- Because it sources a lot of information at once, it may source irrelevant content.

- Cannot generate diagrams or images.

Grok (xAI): 3.375/5

Strengths:

- Performs well with current data access.

- Reliable with content organization and technical clarity.

Weaknesses:

- Weak in tone and professionalism for scientific emails.

- Inconsistent extraction of core ideas.

- Cannot generate diagrams or images.

Conclusion

Final Rankings and Main Strengths:

- Perplexity: Real-time Information Retrieval, Presentation Preparation

- ChatGPT: Literature Summarizing, Project Management

- Gemini: Scientific Text Revision

- Grok: Real-time Information Retrieval

- Claude: Content Outlining

Interestingly, Perplexity ranked highest among the AI models, likely because of its verifiable, sourced responses, which earn it points for scientific accuracy. Claude ranked lowest because its responses were often too convoluted or long, which undermines the point of using an AI to summarize or make concepts easier to grasp. However, these results are biased toward a scientific perspective, which values accuracy and clarity over other, more personal preferences.

To see an example of a life science content AI in action, check out this video:

It’s important to note that even five AI models cannot cover all scientific content creation needs. Although each model has its own strengths, many tasks still require human insight, especially creative work, visual images, and empathizing with an audience. In the rapidly evolving world of life science content creation with AI, no co-pilot is perfect, and in their current state they all need human involvement and management. Combined according to their strengths, however, they could become versatile and strong assistants for future scientific projects.

Moving forward, AI tools will continue to evolve and expand their capabilities. Because of this, it is important to keep testing with our rubric and to track what has changed. In the near future, AI will likely become a better assistant, and current weaknesses may improve. The scope of our testing could also be widened by considering perspectives beyond the scientific one. Ultimately, we hope this blog provides a baseline guide for how to keep testing AI tools and determining which one works best for our lives and our future.