By Nylah Williams, a Human Biology major with a Global Health minor at the University of California, San Diego, Class of 2026.

Top 3 Things You’ll Learn in this Blog:

- The most efficient AI tool for scientific content depends on the task at hand.

- A hybrid AI workflow produces more accurate, higher-quality life science content.

- Claude is the most balanced AI augmentation tool.

Abstract

Scientific content creation demands significant time and expertise. Generative AI tools promise to streamline this process, but choosing the right tool requires understanding each one's specific strengths and limitations. This study evaluates five leading AI platforms (ChatGPT, Claude, Gemini, Perplexity, and Grok) across critical life science content tasks: data retrieval, figure generation, and topic synthesis. Rather than declaring a universal winner, this analysis reveals that each AI tool excels in distinct areas: some prioritize structured reasoning, others enable sophisticated image generation, and a few handle citations more reliably. By mapping these strengths to specific stages of the life science content workflow, researchers and science communicators can build hybrid approaches that deliver faster, more accurate, and genuinely creative content.

This guide transforms AI from a one-tool-fits-all solution into a strategic asset for modern science communication.

Introduction

The emergence of large language models (LLMs), refined through Reinforcement Learning from Human Feedback (RLHF), has fundamentally changed how scientists and communicators develop and present their work. Yet with multiple platforms now competing for attention (ChatGPT, Claude, Gemini, Perplexity, and Grok), content creators face two practical questions: Which tool should I use, and when? And how do I leverage these powerful tools while remaining genuine, creative, safe, and accurate?

Each platform brings distinct capabilities. Some excel at synthesizing complex concepts into clear explanations, others generate publication-quality figures, and a few provide real-time access to the latest research. But these strengths rarely align in a single tool.

This analysis explores how each AI model performs on authentic life science content tasks. By systematically comparing these tools within a realistic content development workflow, from research and drafting through visualization and refinement, we can identify the optimal role each plays. The result is a practical, hybrid approach to AI-assisted science communication that combines speed with accuracy and creativity.

Methods

We began with a general educational exploration of each AI platform to understand its unique interface, capabilities, and limitations. This initial phase allowed us to establish baseline familiarity before testing specific scientific content tasks, ensuring a fair and context-aware evaluation across all tools.

| AI Tool | Unique Attribute | What it is best at |
| --- | --- | --- |
| ChatGPT | Analysis and Reasoning. Very methodical in breaking down why it selects specific references. | In-depth analysis and explanations. Excellent at justifying reference choices and providing detailed breakdowns of complex topics. |
| Claude | Extremely personalized experience. Shows its reasoning process and code. Seeks to build rapport with users. | Step-by-step walkthroughs and visual demonstrations. Strong at creating detailed process models (like plasmid cloning). Personalized interactions. |
| Gemini | Strong visual generation capabilities. Trained on publicly available code datasets. | Image creation and visual content generation. Best for producing diagrams and illustrations. |
| Perplexity | Built-in source verification with “check sources” feature. Focuses on clinical/applied contexts. | Literature retrieval with citations. Excellent at finding recent papers from high-quality journals (Nature Reviews). Real-time information access with source tracking. |
| Grok | Access to real-time Twitter/X data and user-generated content. | Social media integration and simple image tasks. Good for finding foundational/classic papers, though tends toward older sources. |

Topic Selection

To evaluate AI tools within realistic life-science communication workflows, we selected protein degradation and plasmid cloning as representative test topics. Protein degradation involves intricate molecular mechanisms (such as the ubiquitin-proteasome system) and has abundant current literature, making it ideal for testing citation retrieval and synthesis accuracy. Plasmid cloning demands clear visual explanation of multi-step processes, making it an excellent choice for evaluating each tool’s ability to generate and explain scientific figures.

| Content Stage | Prompt Used |
| --- | --- |
| Create Catchy Blog title | Generate an engaging, search-optimized title for a scientific article on protein degradation. |
| Blog outline | Create an outline for a blog explaining protein degradation to life-science audiences |
| Scientific literature retrieval | Retrieve papers exploring protein degradation |
| Real time market retrieval | Summarize recent biotech news or companies focusing on protein degradation therapies |
| Synthesis of material | Synthesize recent findings on protein degradation into a concise blog section. |
| Improve writing/sentence structure | Improve clarity and sentence structure for this paragraph on protein degradation. |
| Image Creation | Create an image of a model showing how a gene of interest is cloned into a plasmid. Use 1850pixels x 950pixels dimension. |
| Final enhancement, ideas for improvement | Suggest improvements to this draft blog for engagement and accuracy. |

Evaluation Criteria

Each AI tool was scored based on:

- Accuracy: For image creation, models were evaluated on inclusion of key elements (EcoRI restriction sites, sticky ends, ligation steps). For literature retrieval, papers were assessed for relevance to ubiquitin-proteasome pathways rather than general apoptosis.

- Recency: Whether retrieved papers were published in 2020 or later.

- Depth: Level of detail and explanation provided.

- Usability: Ease of integrating outputs into a content workflow.

- Citation Quality: Proper sourcing and ability to verify references.
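The recency criterion is the easiest of these to make concrete. The following is a minimal sketch in Python, assuming each retrieved paper is logged with its publication year. The `Paper` class and the `is_recent` and `recent_fraction` helpers are hypothetical names introduced for illustration, not part of the evaluation itself, and the sample entries are taken from the reference list later in this post.

```python
from dataclasses import dataclass

RECENCY_CUTOFF = 2020  # the "2020 or later" threshold from the Recency criterion


@dataclass(frozen=True)
class Paper:
    first_author: str
    year: int


def is_recent(paper: Paper, cutoff: int = RECENCY_CUTOFF) -> bool:
    """Return True when the paper was published in the cutoff year or later."""
    return paper.year >= cutoff


def recent_fraction(papers: list[Paper], cutoff: int = RECENCY_CUTOFF) -> float:
    """Share of papers that pass the recency check (0.0 for an empty list)."""
    if not papers:
        return 0.0
    return sum(is_recent(p, cutoff) for p in papers) / len(papers)


# Illustrative input: four entries from this post's reference list.
papers = [
    Paper("Elden", 2010),
    Paper("Ostrowski", 2017),
    Paper("Valenstein", 2022),
    Paper("Michaelis", 2023),
]

print(recent_fraction(papers))  # 0.5
```

A check like this only covers recency. Accuracy, depth, usability, and citation quality still call for human judgment.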

Results

Key Observations:

- Image Accuracy: All tools identified the key plasmid cloning elements (restriction sites, sticky ends, ligation) at least partly correctly. Gemini produced the most polished visual output, while Claude provided the most detailed step-by-step explanation alongside its image.

- Literature Relevance:

  - ChatGPT focused on foundational work (ubiquitin system discovery, autophagy guidelines).

  - Perplexity and Claude emphasized recent therapeutic applications (targeted protein degradation, 2024-2025).

  - Grok retrieved outdated sources (1996-2003), limiting its utility for current research.

- Citation Verification: Only Perplexity offered a “check sources” feature, though it added more references rather than verifying accuracy. ChatGPT provided the most context for why each paper was selected.

- Workflow Integration: Claude’s personalized approach and visible reasoning made it ideal for collaborative content development. ChatGPT’s analytical depth suited technical writing. Perplexity’s citation format was ready for bibliographies.

| Content Stage | ChatGPT | Claude | Gemini | Perplexity | Grok |
| --- | --- | --- | --- | --- | --- |
| Create Catchy Blog Title | Good – analytical approach | Good – creative and personalized | Good – standard suggestions | Moderate – focused on accuracy | Moderate – casual tone |
| Blog Outline | Excellent – structured, methodical | Excellent – detailed walkthroughs | Good – organized categorization | Good – research-focused structure | Fair – basic organization |
| Scientific literature retrieval | Good – Accurate and recent | Good – Accurate and recent | Bad – Outdated | Excellent – fact checking integration | Bad – not built for this command |
| Real time information retrieval | Moderate – no built-in web access | Moderate – can search when needed | Fair – integrated search | Excellent – built for this with citations | Good – X/Twitter integration |
| Synthesis of material | Excellent – classic foundational papers with context | Good – detailed step-by-step visual | Good – organized by themes/categories | Bad – sourced existing images instead | Fair – better for simple tasks |
| Improve writing/sentence structure | Good – clear explanations | Excellent – personalized, rapport-building | Fair – standard interaction | Good – clinical focus | Fair – outdated sources (1990s-2000s) |
| Image Creation | Excellent – accurate and timely | Good – detailed step-by-step visual | Fair – retrieves already-made images from search engines | Fair – focuses on visual > accuracy | Fair – better for simple tasks |
| Final enhancement, ideas for improvement | Excellent – strong analytical feedback, logical restructuring, and clear suggestions to improve rigor and flow | Excellent – highly personalized, collaborative feedback with transparent reasoning and audience-aware suggestions | Good – helpful high-level suggestions, but less depth in scientific nuance and accuracy checks | Good – improvement ideas grounded in cited literature, though less focused on narrative flow or engagement | Fair – general suggestions, limited scientific depth, and less useful for refining academic-style content |

Claude Emerged as the Most Balanced with ChatGPT a Close Second

Claude stood out as the most balanced, human-centered agent for scientific content creation. Beyond providing accurate and detailed responses, it offers a highly personalized and transparent workflow: it shows its reasoning, shares its code, and adapts explanations to the user’s style and needs. This made it especially good for collaborative, step-by-step science communication tasks, such as modeling experimental processes like plasmid cloning.

Anthropic has recently improved Claude, notably by integrating it with scientific databases like PubMed, which has significantly expanded its ability to retrieve and synthesize up-to-date research. This allows Claude not only to generate content but also to check findings against current literature.

While other tools excel in specific areas (ChatGPT in structured analysis, Gemini in visuals, and Perplexity in citation tracking), Claude provides the most well-rounded, intuitive, and research-informed experience. Its blend of depth, personalization, and expanded data access makes it the most effective AI for developing scientifically rigorous and communicative content.

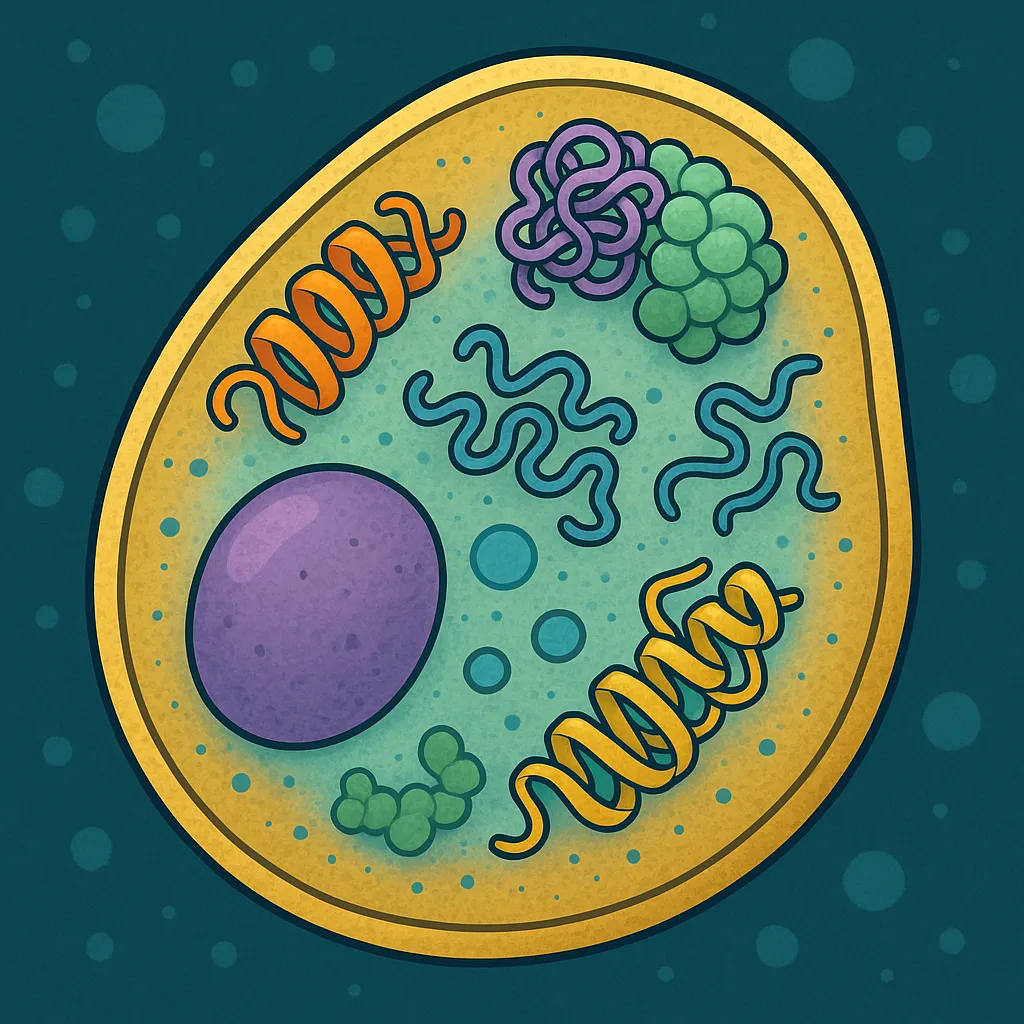

Case Study: How Proteins Power Yeast: A Molecular Look Inside the Cell

To evaluate this defined protocol in a real-world setting, we developed a blog using the most suitable tool for each step, along with tailored prompts. This case study applied the complete workflow to assess performance across tools, showcasing how a collaborative human-AI approach can speed up scientific content creation while preserving both accuracy and creativity.

| Content Stage | Best Tool | Prompt |
| --- | --- | --- |
| Blog Title Ideation | ChatGPT | I want to write a blog on How Proteins Power Yeast: A Molecular Look Inside the Cell. Provide SEO friendly and engaging titles. |
| Outline Development | Claude | Make an outline for blog ‘insert title that ChatGPT gave’ |
| Scientific Literature Retrieval | Claude (Life Science Mode) | Find some current scientific literature from top journals, Nature, Science, etc. that support this blog outline. |
| Real-Time Market Insights | Perplexity | Provide current news relating to this blog ‘insert blog outline’ |
| Synthesis and Drafting | Claude | Make a first draft of this blog and/or fine tune the writing for this blog. Keep it at 500 words. |
| Primary Writing | Human (Nylah) | n/a |
| Sentence Refinement & Grammar | ChatGPT | Improve the writing in this blog so it’s more engaging and interesting and makes more sense. Insert blog. |
| Image Generation | ChatGPT | Create an image for this blog that is fun yet professional. |
| Final Enhancements & Creative Review | Claude + ChatGPT | Suggest improvement for this blog that will make it more interesting, discoverable and educational to early career scientists. |
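For readers who want to reuse this workflow, the stage-to-tool mapping can be kept as a small data structure. The following is a minimal sketch in Python, not a description of how the case study was actually run: the `Stage` class, the `render` and `stages_by_tool` helpers, and the `{topic}`, `{title}`, `{outline}`, and `{draft}` placeholders are all hypothetical names introduced for illustration. The code makes no calls to any AI service; it only organizes prompts for copying into each tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    tool: str
    prompt: str  # empty string for the human-only writing step


# Stage names, tools, and prompt wording follow the case study table; the
# {topic}/{title}/{outline}/{draft} placeholders are illustrative assumptions.
WORKFLOW = [
    Stage("Blog Title Ideation", "ChatGPT",
          "I want to write a blog on {topic}. "
          "Provide SEO friendly and engaging titles."),
    Stage("Outline Development", "Claude",
          "Make an outline for blog '{title}'"),
    Stage("Scientific Literature Retrieval", "Claude (Life Science Mode)",
          "Find some current scientific literature from top journals, "
          "Nature, Science, etc. that support this blog outline."),
    Stage("Real-Time Market Insights", "Perplexity",
          "Provide current news relating to this blog '{outline}'"),
    Stage("Synthesis and Drafting", "Claude",
          "Make a first draft of this blog and/or fine tune the writing "
          "for this blog. Keep it at 500 words."),
    Stage("Primary Writing", "Human (Nylah)", ""),
    Stage("Sentence Refinement & Grammar", "ChatGPT",
          "Improve the writing in this blog so it's more engaging and "
          "interesting and makes more sense. {draft}"),
    Stage("Image Generation", "ChatGPT",
          "Create an image for this blog that is fun yet professional."),
    Stage("Final Enhancements & Creative Review", "Claude + ChatGPT",
          "Suggest improvement for this blog that will make it more "
          "interesting, discoverable and educational to early career "
          "scientists."),
]


def render(stage: Stage, **context: str) -> str:
    """Fill a stage's placeholders; unused context keys are ignored."""
    return stage.prompt.format(**context)


def stages_by_tool(workflow: list[Stage]) -> dict[str, list[str]]:
    """Group stage names under the tool assigned to them."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for stage in workflow:
        grouped[stage.tool].append(stage.name)
    return dict(grouped)


if __name__ == "__main__":
    for stage in WORKFLOW:
        print(f"{stage.name} -> {stage.tool}")
    print(render(WORKFLOW[1], title="The Hidden World of Yeast Proteins"))
```

Keeping the prompts in one place makes it easy to swap a tool for a given stage or to see at a glance how much of the workflow depends on each platform.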

Blog Result

Title: The Hidden World of Yeast Proteins and How They Shape Cellular Life

When you think of yeast, you might picture bread rising or beer fermenting, but beneath these everyday moments lies a microscopic world that has reshaped modern biology. Yeast proteins, the tiny molecular machines inside these single-celled organisms, are helping scientists uncover how all cells work, from microbes to humans.

Why Yeast Became Biology’s Favorite Model

Saccharomyces cerevisiae, commonly known as baker’s yeast, occupies a special place in research. Though it’s just one cell, it’s a eukaryote like us, with cellular machinery that mirrors our own [7]. Nearly half of yeast proteins resemble human proteins, meaning discoveries in yeast often translate directly to human biology.

A groundbreaking 2023 Nature study mapped almost 4,000 yeast proteins and 31,000 interactions, revealing a dense, interconnected web [4]. Each protein interacts with an average of 16 others, forming modules that coordinate DNA replication, metabolism, and stress responses. Instead of acting alone, proteins behave like members of a carefully orchestrated network.

The Protein Orchestra Behind Cellular Life

Yeast’s roughly 6,000 protein-coding genes produce a highly dynamic proteome. These proteins assemble into complexes, pass messages, and regulate one another, allowing cells to adapt quickly to shifting environments.

A striking example is the TOR pathway, a master regulator conserved from yeast to mammals. When nutrients are plentiful, TOR promotes growth; when they’re scarce, it activates recycling systems to keep the cell alive [3]. Because this pathway also influences aging, metabolism, and cancer in humans, studying TOR in yeast provides insights that extend far beyond the petri dish.

From Yeast Models to Human Medicine

Despite its simplicity, yeast has become a powerful tool for understanding human diseases. The cellular systems that maintain protein quality and respond to stress are ancient and deeply conserved. When human disease proteins malfunction in yeast, they often replicate the same toxic behavior seen in patients.

This has led to remarkable discoveries. A yeast model of ALS, for instance, revealed that ataxin-2 is a genetic risk factor for the disease, an insight that emerged from watching protein aggregation in yeast cells [1]. Today, yeast continues to illuminate mechanisms underlying Alzheimer’s, Parkinson’s, Huntington’s disease, and more.

A Microscopic Organism With a Massive Future

Cutting-edge technologies such as CRISPR editing, advanced mass spectrometry, and AI tools like AlphaFold are accelerating discoveries in yeast protein biology. These insights are fueling innovations in biofuel production, pharmaceutical manufacturing, and sustainable food systems.

The next time you watch dough rise, remember that the organisms creating those bubbles are also helping us understand cancer, decode neurodegeneration, and engineer a more sustainable future. In the hidden world of yeast proteins lies a blueprint for how life functions; we are only beginning to read it.

References

1. Elden, Amy C., et al. “Ataxin-2 Intermediate-Length Polyglutamine Expansions Are Associated with Increased Risk for ALS.” Nature, vol. 466, no. 7310, 25 Aug. 2010, pp. 1069–1075, doi:10.1038/nature09320.

2. Gould, Claire, et al. “An Evolutionary Mechanism to Assimilate New Nutrient Sensors into the mTORC1 Pathway.” Nature Communications, vol. 15, 21 Mar. 2024, article 2517, doi:10.1038/s41467-024-46680-3.

3. Goul, Claire, et al. “The Molecular Basis of Nutrient Sensing and Signalling by mTORC1 in Metabolism Regulation and Disease.” Nature Reviews Molecular Cell Biology, vol. 24, no. 12, 26 Sept. 2023, pp. 857–875, doi:10.1038/s41580-023-00641-8.

4. Michaelis, André C., et al. “The Social and Structural Architecture of the Yeast Protein Interactome.” Nature, vol. 624, no. 7990, 7 Dec. 2023, pp. 192–200, doi:10.1038/s41586-023-06739-5.

5. Ostrowski, Lauren A., et al. “Ataxin-2: From RNA Control to Human Health and Disease.” Genes, vol. 8, no. 6, 5 June 2017, article 157, doi:10.3390/genes8060157.

6. Valenstein, Maxwell L., et al. “Structure of the Nutrient-Sensing Hub GATOR2.” Nature, vol. 607, no. 7920, 13 July 2022, pp. 610–616, doi:10.1038/s41586-022-04939-z.

7. Bayandina, Svetlana V., and Dmitry V. Mukha. “Saccharomyces cerevisiae as a Model for Studying Human Neurodegenerative Disorders: Viral Capsid Protein Expression.” International Journal of Molecular Sciences, vol. 24, no. 24, 7 Dec. 2023, article 17213, doi:10.3390/ijms242417213.

8. Gitler, Aaron D. “Research — Gitler Lab.” Stanford School of Medicine, gitlerlab.org/research. Accessed 25 Nov. 2025.

In Conclusion

This workflow highlighted the value of task-specific AI augmentation. Perplexity proved essential for identifying recent, high-quality literature and building a reliable reference list. ChatGPT excelled at structuring the narrative and weaving foundational studies into a coherent scientific story. Claude added strength in refining explanations, maintaining tone, and ensuring the content remained readable and human-centered. Visually oriented tools supported conceptual clarity but required careful validation to maintain biological accuracy. Together, these tools transformed a complex topic, yeast protein networks, into a scientifically rigorous yet approachable blog post.

The outcome reinforces a key takeaway of this study: AI is most effective in science communication not as a replacement for expertise, but as a collaborative system that amplifies human judgment, creativity, and critical thinking. While minor inconsistencies such as reference ordering highlight the continued need for human correction, the overall results underscore AI’s potential to meaningfully accelerate and enhance science communication when applied intentionally.